Kubernetes makes it easy to run and deploy applications inside a cluster, but with every convenience, there comes the need for limitations and controls. Kubernetes provides the Network Policy feature, allowing you to control network traffic and enforce network isolation between your pods and services.

How can Network Policy help?

Network policies can improve your security by isolating networks and restricting connections between pods. If one of your services is compromised, the attacker has a much harder time reaching your other services.

You can manage East-West traffic within your cluster to prevent unauthorized traffic between your pods.

You can support a Zero Trust environment by implementing 'deny all' rules and only allowing the necessary traffic.

You can use network policies in many scenarios. For example, you can limit database connections to only the apps that need them, restrict apps to communicating only within their own namespaces, and isolate services deployed for different customers to support multi-tenancy.

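Kubernetes does not enforce network policies on its own. The NetworkPolicy API is always available, but policies only take effect when your cluster uses a CNI plugin that implements them, such as Calico or Cilium. With a plugin that lacks support, creating a policy has no effect.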

How to write a network policy?

Writing network policies is quite simple. The goal is to control traffic entering (ingress) and exiting (egress) your targets. You specify the targets and then define egress and ingress rules for them. Let’s dive into how to write the configuration.

The first step is to define the policy type: ingress, egress, or both.

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: my-awesome-policy
  namespace: default
spec:
  # podSelector (required) is added in the next step
  policyTypes:
    - Ingress
    - Egress

Next, you must specify the pods the policy applies to. A NetworkPolicy is a namespaced resource, so it only ever targets pods in its own namespace (metadata.namespace), and spec.podSelector picks the pods within that namespace by label. There is no namespace selector at this level; namespaceSelector is only valid inside ingress and egress rules.

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: my-awesome-policy
  namespace: default   # the policy targets pods in this namespace only
spec:
  podSelector:
    matchLabels:
      app: gateway
  policyTypes:
    - Ingress
    - Egress

If you want to match all pods in the namespace, you can use an empty selector, {}. For example:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: my-awesome-policy
  namespace: default
spec:
  podSelector: {}
  policyTypes:
    - Ingress
    - Egress

Next, you define the rules: the sources you want to receive traffic from (ingress) and the destinations you want to send traffic to (egress). Let’s start with ingress rules. You can limit ingress traffic by pods, namespaces, or IP ranges.

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: my-awesome-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: database
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: my-backend-service

Example with a pod selector (only accept traffic from pods with a specific label).

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: my-awesome-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: database
  policyTypes:
    - Ingress
  ingress:
    - from:
        - namespaceSelector:
            matchLabels:
              role: debug

Example with a namespace selector (only accept traffic from namespaces labeled role: debug).

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: my-awesome-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: database
  policyTypes:
    - Ingress
  ingress:
    - from:
        - ipBlock:
            cidr: 192.168.0.0/16

Example with IP ranges.
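An ipBlock can also carve exceptions out of a range with the except field. The following is a minimal sketch rather than a recommended configuration: the policy name and the excluded subnet 192.168.1.0/24 are made-up values for illustration.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-ip-range-with-exception   # hypothetical name
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: database
  policyTypes:
    - Ingress
  ingress:
    - from:
        - ipBlock:
            cidr: 192.168.0.0/16
            except:
              - 192.168.1.0/24   # illustrative subnet that stays blocked
```

Every entry under except has to fall inside the cidr range it belongs to.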

You can even limit the policy to specific ports and protocols, if your CNI supports it. For example:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: my-awesome-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: postgres-database
  policyTypes:
    - Ingress
  ingress:
    - ports:
        - protocol: TCP
          port: 5432

And the same applies to egress rules.
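Since the examples so far only show ingress, here is a sketch of what an egress counterpart might look like. It assumes client pods labeled app: backend-service that should only open TCP connections on port 5432 to pods labeled app: postgres-database in the same namespace; the policy name is hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: backend-egress-to-postgres   # hypothetical name
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: backend-service           # assumed label for the client pods
  policyTypes:
    - Egress
  egress:
    - to:
        - podSelector:
            matchLabels:
              app: postgres-database
      ports:
        - protocol: TCP
          port: 5432
```

Because to and ports sit in the same rule, a connection must match both. Keep in mind that a policy like this also blocks DNS lookups from the selected pods unless another rule or policy allows them.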

When you have multiple rules, you need to define the relationship between them. Do you want them to be logical 'AND' or 'OR'? Let’s go with this example: I want this pod to be able to send requests to the production and stage namespaces, but not others. So, the egress rule will allow traffic to either 'production' or 'stage', which is a logical 'OR'. It looks like this:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: my-awesome-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: backend-service
  policyTypes:
    - Egress
  egress:
    - to:
        - namespaceSelector:
            matchLabels:
              environment: production
    - to:
        - namespaceSelector:
            matchLabels:
              environment: stage

For a logical 'OR', you should write the selectors as separate rules, each with its own 'to' or 'from' section.

Let’s go with this example: I want my target pod to only accept connections from a specific pod in a specific namespace.

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: my-awesome-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: backend-service
  policyTypes:
    - Ingress
  ingress:
    - from:
        - namespaceSelector:
            matchLabels:
              environment: production
          podSelector:
            matchLabels:
              app: front-end

When you want a logical 'AND' relationship, you must write the selectors in the same entry of a 'to' or 'from' section. Note that podSelector has no leading dash here: a dash would start a new entry, and separate entries are combined with a logical 'OR'.
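The difference between the two relationships can come down to a single dash, so it may help to see both forms side by side. This is an illustrative sketch only: the policy names and the app: api target label are hypothetical, and the selectors reuse the labels from the example above.

```yaml
# AND: one entry in "from" holding both selectors.
# Allows only pods labeled app=front-end that run in namespaces
# labeled environment=production.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-and-example   # hypothetical name
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: api              # hypothetical target label
  policyTypes:
    - Ingress
  ingress:
    - from:
        - namespaceSelector:
            matchLabels:
              environment: production
          podSelector:
            matchLabels:
              app: front-end
---
# OR: two entries in "from" (note the dash before podSelector).
# Allows any pod in namespaces labeled environment=production, plus
# pods labeled app=front-end in the policy's own namespace.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-or-example    # hypothetical name
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: api              # hypothetical target label
  policyTypes:
    - Ingress
  ingress:
    - from:
        - namespaceSelector:
            matchLabels:
              environment: production
        - podSelector:
            matchLabels:
              app: front-end
```

A podSelector that stands alone in an entry only matches pods in the policy's own namespace, which is why the OR form is usually broader than intended.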

Practical examples

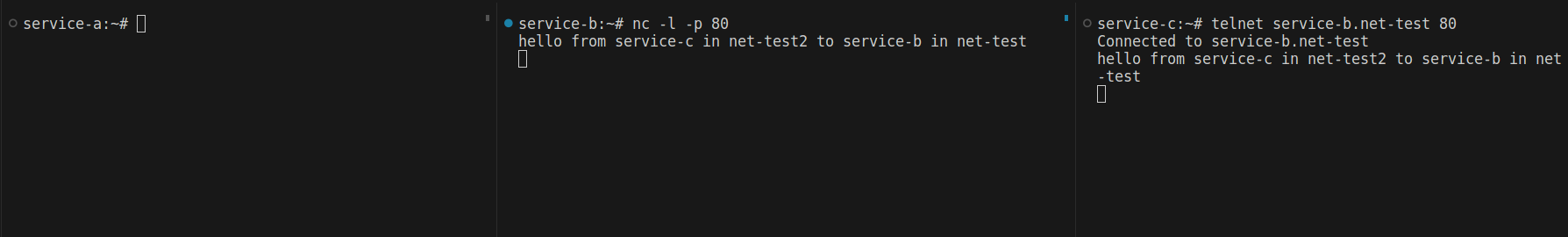

Now, let’s write some rules and test to see what happens when we apply network policies. We’ll deploy three pods, apply the rules, and control traffic between them.

We will deploy three pods using the netshoot image, which contains a variety of network debugging tools, along with three services to resolve them by name. The manifest is as follows:

apiVersion: v1
kind: Pod
metadata:
  name: service-a
  labels:
    service-name: service-a
spec:
  containers:
    - image: nicolaka/netshoot
      name: service-a
      command: ["tail"]
      args: ["-f", "/dev/null"]
---
apiVersion: v1
kind: Pod
metadata:
  name: service-b
  labels:
    service-name: service-b
spec:
  containers:
    - image: nicolaka/netshoot
      name: service-b
      command: ["tail"]
      args: ["-f", "/dev/null"]
---
apiVersion: v1
kind: Pod
metadata:
  name: service-c
  labels:
    service-name: service-c
spec:
  containers:
    - image: nicolaka/netshoot
      name: service-c
      command: ["tail"]
      args: ["-f", "/dev/null"]
---
apiVersion: v1
kind: Service
metadata:
  name: service-a
spec:
  selector:
    service-name: service-a
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: service-b
spec:
  selector:
    service-name: service-b
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: service-c
spec:
  selector:
    service-name: service-c
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80

And I’ll apply them in the same namespace.

kubectl create ns net-test

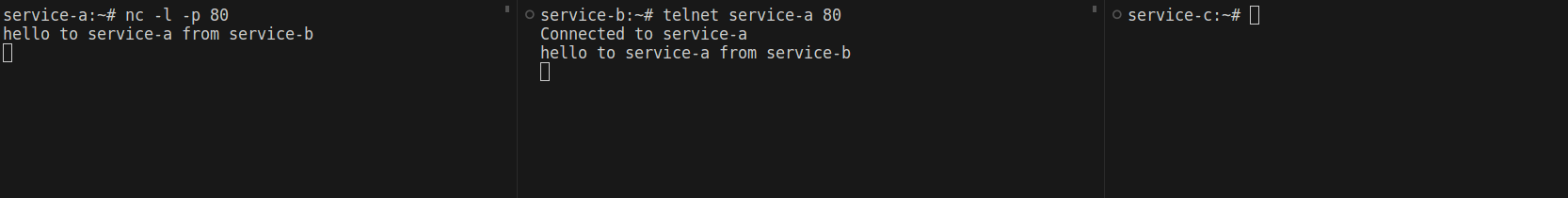

kubectl appliy -f services.yaml -n net-testI'll exec into service-a and run the nc -l command to listen on a port, then exec into service-b to send a TCP request to service-a.

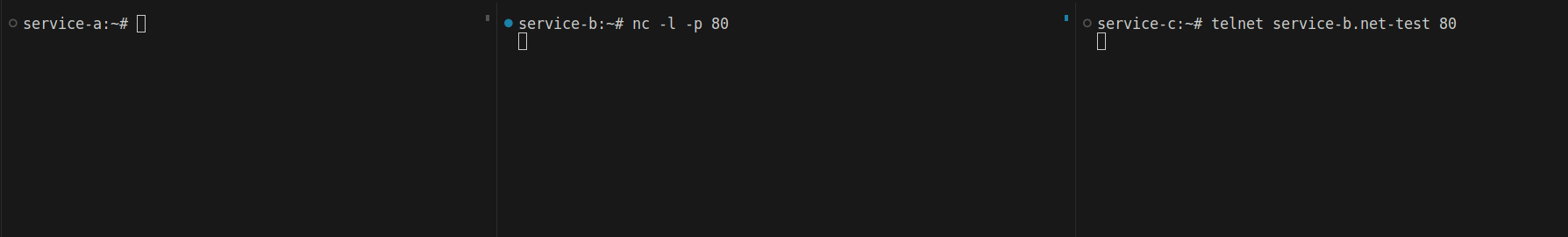

Now, let’s apply a Kubernetes network policy and see what happens. I’ll limit service-a to only accept traffic from service-b. The policy is as follows:

apiVersion: networking.k8s.io/v1

kind: NetworkPolicy

metadata:

name: services-limitations

namespace: net-test

spec:

podSelector:

matchLabels:

service-name: service-a

policyTypes:

- Ingress

ingress:

- from:

- podSelector:

matchLabels:

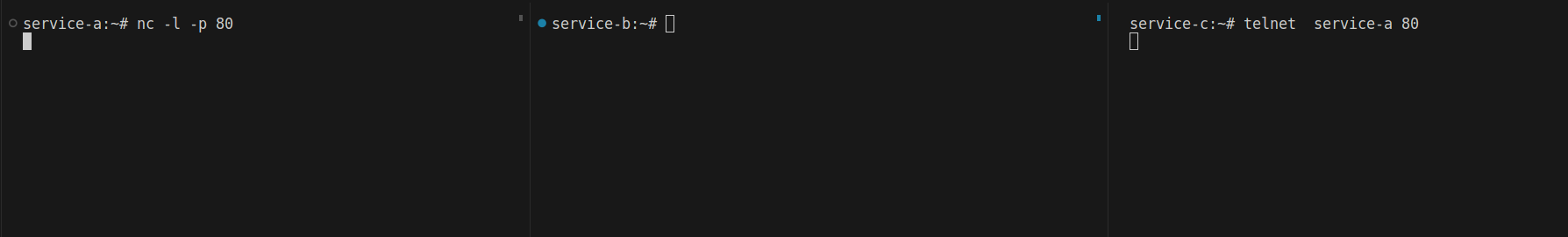

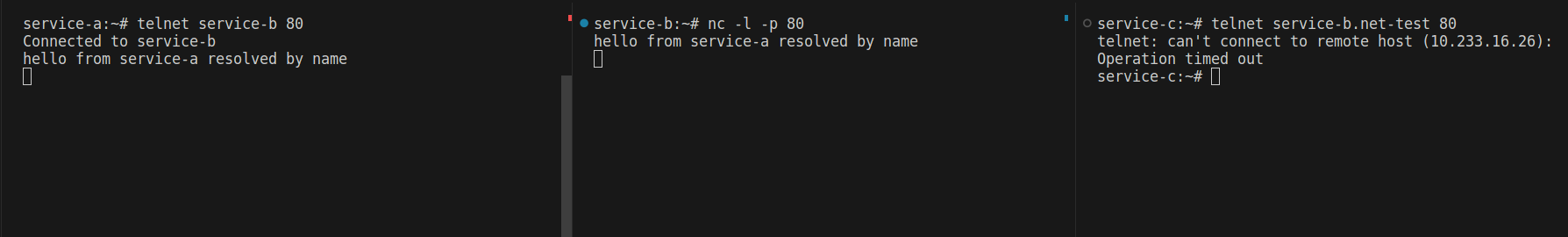

service-name: service-bNow, we can’t connect to service-a from service-c.

Service-b will still be able to connect to service-a, and service-c can communicate with other services as needed.

If I try the opposite direction (from service-a to service-b), the request still goes through. However, I want it to be a one-way connection from service-b to service-a, so I update the policy to the following, denying all egress from service-a. This way, service-a will only accept requests from service-b and cannot open connections of its own.

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: services-limitations
  namespace: net-test
spec:
  podSelector:
    matchLabels:
      service-name: service-a
  policyTypes:
    - Ingress
    - Egress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              service-name: service-b
  egress: []

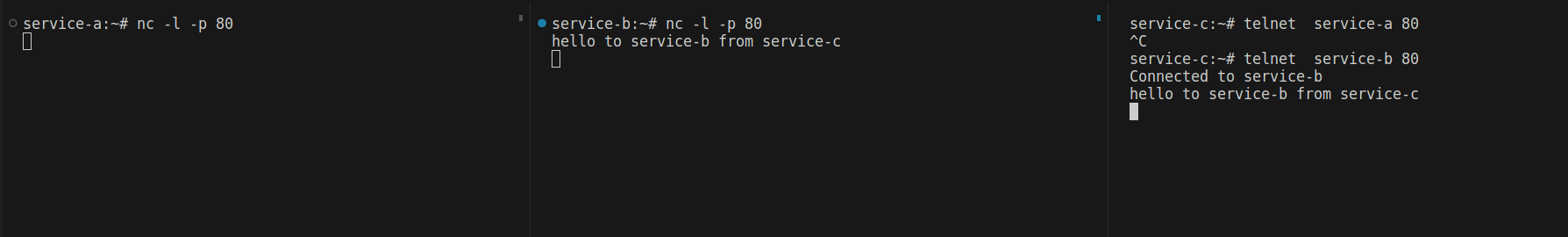

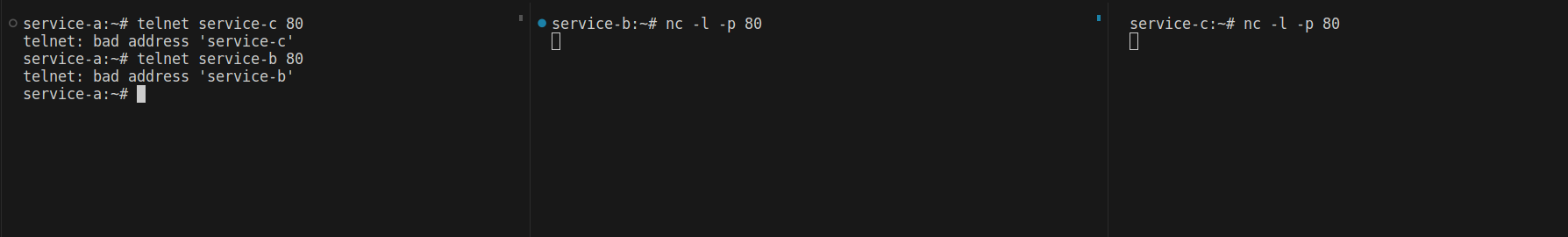

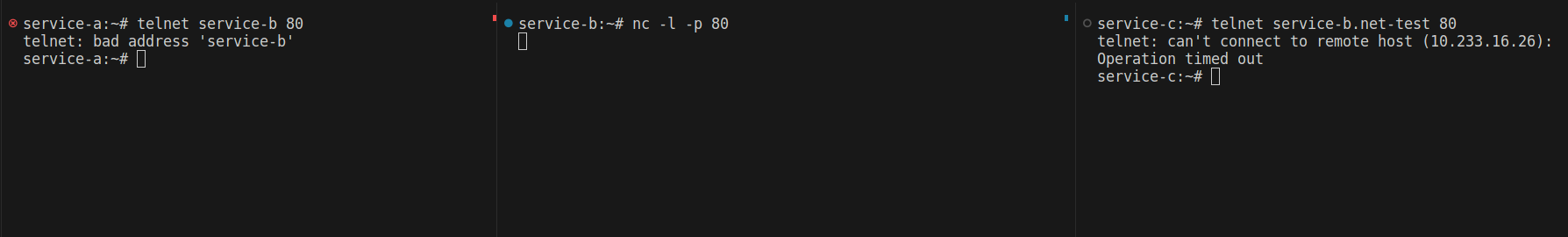

Now, I can't send any requests to service-b or service-c from service-a.

Let’s try something fun. I want pods in the same namespace to be able to send requests to and receive requests from each other, but not communicate with pods in other namespaces. First, I move service-c to the 'net-test2' namespace. From there, I can still connect to service-b by adding the namespace to the service name (for example, service-b.net-test).

So, let’s change the policy this way:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: services-limitations
  namespace: net-test
spec:
  podSelector: {}
  policyTypes:
    - Ingress
    - Egress
  ingress:
    - from:
        - podSelector: {}
  egress:
    - to:
        - podSelector: {}

The empty selector means all pods in the same namespace as the network policy. Now, if I try to connect to service-b from service-c, it won’t work.

Let’s try from service-a to service-b

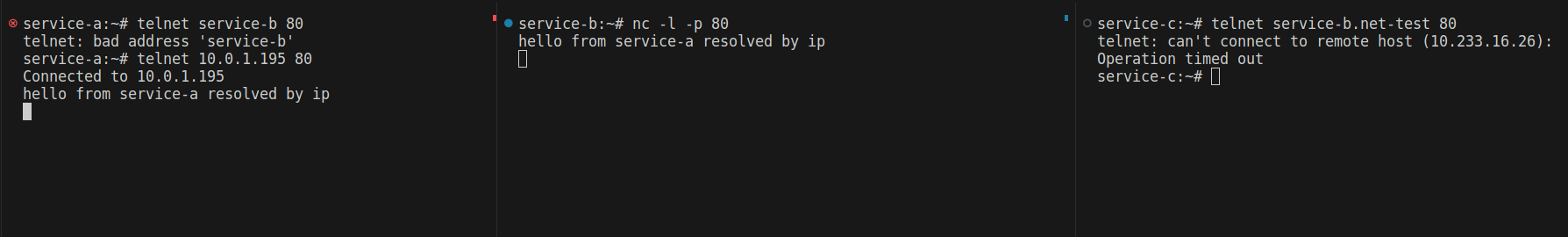

I cannot connect to service-b. But what happened? Why is traffic inside the namespace failing too? The issue is that when you block all egress traffic leaving the namespace, even DNS requests to CoreDNS are blocked. As a result, you can’t resolve services by their names, and you have to specify the IP address instead.

How can we fix this? By allowing egress to CoreDNS and the same namespace.

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: services-limitations
  namespace: net-test
spec:
  podSelector: {}
  policyTypes:
    - Ingress
    - Egress
  ingress:
    - from:
        - podSelector: {}
  egress:
    - to:
        - podSelector: {}
    - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kube-system
          podSelector:
            matchLabels:
              k8s-app: kube-dns
      ports:
        - protocol: UDP
          port: 53
        # DNS falls back to TCP for large responses
        - protocol: TCP
          port: 53

Now, if we try again...

When applying egress rules, keep in mind cluster services such as DNS, as well as operational pods that need to connect to the API server. You must explicitly allow that traffic.
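Network policies are additive, so one option is to keep the DNS exception in a small policy of its own and reuse it in every namespace that restricts egress. The sketch below assumes, as in the example above, that the cluster DNS pods run in kube-system and carry the label k8s-app: kube-dns; the policy name is hypothetical, and the labels may differ in your cluster.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-dns-egress   # hypothetical name
  namespace: net-test
spec:
  podSelector: {}          # every pod in net-test
  policyTypes:
    - Egress
  egress:
    - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kube-system
          podSelector:
            matchLabels:
              k8s-app: kube-dns   # assumed label; check your DNS pods
      ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
```

Keeping this rule separate means the stricter policies for each workload do not each need to repeat it.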

The next example is a real use case I had for network policies. I needed to support multi-tenancy inside my Kubernetes cluster, with each namespace representing a separate tenant. Tenants should be able to send requests within their own namespace, and from outside the namespace their services should only accept connections from my Traefik ingress controller. This cuts off unauthorized requests to the services. Services in one tenant should not be able to send requests to other tenants, which improves tenant isolation: even if an intruder gains access to one tenant, they won’t be able to connect to the others.

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: isolation-policy
spec:
  podSelector: {}
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector: {}
    - from:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: ingress

As you can see, ingress will only be allowed from pods in the same namespace and from the namespace named 'ingress', where my ingress controller pod resides.
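If other workloads share the ingress namespace, the rule could be narrowed further by combining the namespace selector with a pod selector in the same entry. This is only a sketch: the policy name is hypothetical, and the label app.kubernetes.io/name: traefik is an assumption about how the Traefik pods are labeled, so check your own deployment before relying on it.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: isolation-policy-strict   # hypothetical name
spec:
  podSelector: {}
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector: {}          # traffic from the tenant's own namespace
    - from:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: ingress
          podSelector:
            matchLabels:
              app.kubernetes.io/name: traefik   # assumed label
```

As with the original policy, this would be applied once in each tenant namespace.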

How do network policies affect performance?

The performance of network policies depends largely on the CNI (Container Network Interface) plugin you’re using. Each selector is translated into rules in the plugin's data plane (iptables or eBPF). With iptables, these rules are evaluated sequentially, so latency can grow with the number of rules, and it's best to match targets as early as possible. eBPF generally offers better performance than iptables. Tools like Hubble can help visualize traffic flow, while Prometheus is useful for measuring latency.

- Simplify Selectors: Group pods logically to reduce the number of selectors. For example, instead of app1=a1, app2=a2, use a single selector like group=front-end.

- Minimize Egress Rules: Use egress rules only when necessary, as they are more resource-intensive.

- Use Efficient CNIs: Go for eBPF-based CNIs like Cilium for better performance and scalability.
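To illustrate the first tip, here is a sketch in which the client pods all carry one shared label, group: front-end, so a single selector covers them. The policy name and the app: backend-service target label are illustrative assumptions.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-front-end-group   # hypothetical name
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: backend-service      # illustrative target label
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              group: front-end  # one shared label instead of app1=a1, app2=a2
```

New front-end pods only need the shared label to be covered, so the policy itself does not have to change as the group grows.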

Policies For Unlabeled Pods

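Unlabeled pods or misconfigured deployments can inadvertently bypass your policies: policies select pods by label, so a pod without the expected labels is not selected by any of them and stays unrestricted. To handle this, I suggest using a deny-all policy as a baseline, though it might make debugging more challenging, and then applying specific rules for the traffic you need. For example, here’s a deny-all policy: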

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-all
  namespace: default
spec:
  podSelector: {}
  policyTypes:
    - Ingress
    - Egress
  ingress: []
  egress: []

Next, let's apply a rule to control the network access of debugging or staging tools. This helps limit their reach to only what's necessary for troubleshooting or testing.

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-debugpods
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: my-service
  policyTypes:
    - Ingress
  ingress:
    - from:
        - namespaceSelector:
            matchLabels:
              role: debugger

Conclusion

Network policies are a powerful tool for securing and managing traffic within your Kubernetes cluster. By carefully crafting policies, simplifying selectors, minimizing egress rules, and choosing the right CNI, you can significantly enhance security and performance. Always consider edge cases like unlabeled pods and misconfigured deployments, and apply thoughtful rules for tools like debugging or staging environments. With the right approach, network policies help maintain isolation, control, and efficiency within your cluster, ensuring a more secure and scalable infrastructure.

Thank you for reading! I hope this guide helps you better understand how to implement and optimize Kubernetes network policies. If you have any questions or tips, feel free to reach out. Let’s continue learning and improving together!